Created: Apr 10, 2023 07:09 AM

Last edited time: Aug 18, 2023 08:55 PM

Tags: StableDiffusion, Free and open source, Highly controllable

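This note is only meant to give people who know nothing about StableDiffusion a way in, so they aren't lost in the flood of videos and guides online. I hope it helps!

I share high-quality tutorials from around the web. 😇 From getting started to giving up (just kidding!), continuously updated. If anything is outdated or wrong, contact me or leave a comment at the relevant spot; if you find a better tutorial, please let me know so I can add it. 😘 Thanks!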

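Installation:

Two options (pick one)

- 秋葉aaaki's all-in-one package and launcher (recommended: works out of the box, no tinkering)

- Deploy it yourself (for people with GitHub experience; you can still use 秋葉aaaki's launcher)

Tutorials:

Basic usage (!!! must-watch for beginners !!!)

Illustrated guides (a complete overview of SD; watch selectively)

Models:

The largest and most complete model site:

A model site run by Chinese users:

↘ What kinds of models are there? [SD principles in 5 minutes: how prompt text gets into the image]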

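| Model | Required? | Purpose | Usage |
|---|---|---|---|
| Checkpoint / Dreambooth (base model, a.k.a. "large model"); `.ckpt`, `.safetensors` | Required | Checkpoint: the model parameters saved during training, used to restore or transfer the model (for security reasons, `.safetensors` is now the usual format) | Read the author's description! It almost always gives suitable parameters and companion models. Folder: `.\stable-diffusion-webui\models\Stable-diffusion` |
| VAE (variational autoencoder); `.pt`, `.safetensors` | Required (check the base model's description to see whether you need one) | Decodes latent variables into an image. In practice it strengthens colors or stabilizes the image structure | ① Some base-model authors provide their own VAE; ② some simply use one of the few most common VAE models; ③ some need no VAE because one is already baked into the base model. Folder: `.\stable-diffusion-webui\models\VAE` |
| Embedding (Textual Inversion) | Optional | Uses a few images showing a concept to teach the base model a new "word" for that concept (pose, style, texture, etc.; the word is actually stored as vectors) | Textual inversion: put the trigger word in the positive or negative prompt, as the model description says. Folder: `.\stable-diffusion-webui\embeddings` |
| LoRA / LyCORIS | Optional | A method for fine-tuning the CLIP and UNet weights (loading LyCORIS requires an extension) | Put it in the positive prompt (it cannot go in the negative prompt); some have trigger words. Folder: `.\stable-diffusion-webui\models\Lora` |
| Hypernetworks; `.pt` | Optional | Plays a similar role to LoRA (a small add-on network that modifies the base model), although it works differently internally | Used the same way as LoRA; this type is rarely used now. Folder: `.\stable-diffusion-webui\models\hypernetworks` |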
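The folder column above can be captured as a simple lookup when sorting downloaded files. A minimal Python sketch; the type keys (`"checkpoint"`, `"lora"`, ...) and the `target_dir` helper are hypothetical names. The type is passed explicitly because the file extension alone cannot tell you what a model is: `.safetensors` is used by checkpoints, VAEs and LoRAs alike.

```python
from pathlib import Path

# Folder for each model type, relative to the stable-diffusion-webui root (from the table above).
MODEL_DIRS = {
    "checkpoint": Path("models") / "Stable-diffusion",
    "vae": Path("models") / "VAE",
    "embedding": Path("embeddings"),
    "lora": Path("models") / "Lora",
    "hypernetwork": Path("models") / "hypernetworks",
}


def target_dir(webui_root: Path, model_type: str) -> Path:
    """Return the folder a downloaded model of `model_type` should be copied into."""
    try:
        return webui_root / MODEL_DIRS[model_type]
    except KeyError:
        raise ValueError(f"unknown model type: {model_type!r}") from None


print(target_dir(Path("stable-diffusion-webui"), "lora"))
```

`pathlib` joins with the platform's separator, so the same mapping works on Windows, where the paths in the table use backslashes.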

Extensions:

.\stable-diffusion-webui\extensions: every installed extension lives here! Check whether it is already there before installing it.
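Since each extension is just a folder inside `extensions`, "check before installing" can be scripted. A minimal Python sketch; `installed_extensions` and `is_installed` are hypothetical helpers, and the comparison is case-insensitive because extension folder names (for example `sd-webui-controlnet`, the ControlNet extension's repository name) vary in capitalization.

```python
from pathlib import Path


def installed_extensions(webui_root: Path) -> list[str]:
    """List the folder names under <webui_root>/extensions, one folder per extension."""
    ext_dir = webui_root / "extensions"
    if not ext_dir.is_dir():
        return []
    return sorted(p.name for p in ext_dir.iterdir() if p.is_dir())


def is_installed(webui_root: Path, name: str) -> bool:
    """Case-insensitive check for an extension folder with the given name."""
    return name.lower() in (n.lower() for n in installed_extensions(webui_root))


print(installed_extensions(Path("stable-diffusion-webui")))
```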

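| Basic extensions | Must-install and possibly useful extensions | How to use? |
|---|---|---|
| Civitai model manager [must-install] | | |
| Image-control extension [must-install] | ControlNet (CN): there is a lot to learn here; it is also an important drawing feature that Midjourney (mj) currently cannot match | |
| Prompt interrogation (guess a prompt from an image) | | |
| LoRA mixing | Does not merge the LoRAs; it only makes it easier to tune each one's weight | |
| LyCORIS loader | Installing it is all it takes; no tutorial needed | |
| Tag autocomplete | | |
| Allow a higher CFG Scale | Mitigates the color breakdown that appears when CFG is set too high | |
| Low-VRAM generation or regional generation | | |
| UI theming | | |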
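For context on the "allow a higher CFG Scale" row: classifier-free guidance combines an unconditional noise prediction with the prompt-conditioned one at every sampling step, as below, where $s$ is the CFG Scale and $\varnothing$ is the empty prompt (in the WebUI the negative prompt takes its place). This is the standard formulation, not something the note derives, and it says nothing about how that extension itself keeps colors stable.

```latex
\hat{\epsilon}_\theta(x_t, c) = \epsilon_\theta(x_t, \varnothing)
  + s \,\bigl(\epsilon_\theta(x_t, c) - \epsilon_\theta(x_t, \varnothing)\bigr)
```

Because $s$ multiplies the difference term, large values push the prediction far beyond what the model would produce on its own, which is one common explanation for the color breakdown at high CFG.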

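| Translation extensions | Choose carefully! These tend to cause problems | How to use? |
|---|---|---|
| Prompt translation | | |
| Prompt translation [bundled with the all-in-one package] | | |
| UI translation | | |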

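| Advanced extensions | Extensions that take more study to master | How to use? |
|---|---|---|
| Extra prompt syntax | | |
| LoRA block weights (per-layer control) | | |
| LoRA merging | | |
| Regional image generation | | |
| Different LoRAs for different prompts | | |
| Detect hands and faces and inpaint them | | |
| StableSR super-resolution | | |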
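On the "LoRA merging" row, as opposed to the "LoRA mixing" row among the basic extensions, which keeps LoRAs separate: merging bakes a LoRA's low-rank update into the base weights. A minimal pure-Python sketch of the usual update for one layer, W' = W + weight · (alpha / rank) · (up × down). The `down` / `up` / `alpha` naming follows how kohya-style LoRA files are commonly laid out; treat those names and the single-layer framing as assumptions. Per-block weight tools roughly amount to using a different `weight` for each layer.

```python
Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def merge_lora(w: Matrix, down: Matrix, up: Matrix, alpha: float, weight: float) -> Matrix:
    """Return W + weight * (alpha / rank) * (up @ down) for a single layer.

    w:    d_out x d_in base weight
    down: rank x d_in
    up:   d_out x rank
    """
    rank = len(down)
    delta = matmul(up, down)  # d_out x d_in low-rank update
    scale = weight * alpha / rank
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
            for i in range(len(w))]


# Tiny example: 2x2 identity base weight, rank-1 LoRA applied at weight 0.5.
merged = merge_lora([[1.0, 0.0], [0.0, 1.0]], down=[[1.0, 1.0]], up=[[1.0], [2.0]],
                    alpha=1.0, weight=0.5)
print(merged)
```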

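Prompts ("spells"):

Quick prompt site for portraits:

Compared with Midjourney, few prompt sites focus on SD; SD images are mostly posted on the model sites listed above. If you want portraits, a site like the one above is enough; for anything else, try Midjourney prompt sites (there really are a lot of them).

Other

Using GPT to help write prompts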

You are a master artist, well-versed in artistic terminology, with a vast vocabulary for visually describing the things you see. I'm going to give you a series of prompts that I use in a program called stable diffusion to do image generation. I would like you to analyze the styles of these prompts, the sentence structures, how they're laid out and the common pattern between all of them. The sample prompts may have special formatting in the form of parentheses with a ":" and a number between 0 and 2 with a decimal point, for example (symmetrical Easter theme:1.3) or (cinematic:1.4) or (symmetrical:1.2). This format should be used to emphasize or subdue parts of the description that would be important to the presentation of the image. A number above 1 emphasizes whatever is in the parentheses, and anything below 1 de-emphasizes whatever is in the parentheses. Do not use the word "emphasis" when utilizing this format.

You will be asked to generate prompts based on your analysis. I want you to take note that the image generation software pays more attention to what's at the beginning of the prompt, and that attention declines the closer you get to the end of the positive prompt. The formatting may look something like this: [main character or focus of the image], [lighting style, shot style, and color], [other aesthetics like mood, emotion, peripheral subject matter], [possible artists or art styles]

Prompting:

Order matters - words near the front of your prompt are weighted more heavily than the things at the end of your prompt.

Most prompts should have the following at the beginning: "((best quality)), ((masterpiece)), (detailed), "

When using an artist name or artist reference always use the format " in the style of [artist name]", for example " in the style of Greg Rutkowski" or " in the styles of Michelangelo and Leonardo da Vinci"

[Example low, medium and high quality prompts]:

[low detail prompt]:portrait of a futuristic beautiful woman and a futuristic city, deep vivid colors

[medium detail prompt]:modern alchemists lab drawn with watercolor pencil, magic potions, remedies, vials and concoctions, vivid colors, bubbling, bursting, full of life, organic, oily, bones, dark, scary

[High detailed, high quality prompt]: (best quality:1.4), (masterpiece:1.4), (detailed:1.3), 8K, portrait, elven queen in a lush forest, shimmering golden gown, jeweled tiara, enigmatic smile, surrounded by ethereal glowing fauna, (magic-infused:1.4), vivid colors, intricate foliage patterns, chiaroscuro lighting, (Pre-Raphaelite art style:1.2)

[Sample positive prompts to analyze]:

put your sample prompts here

You will be asked to generate prompts based on the instructions above. You will provide a prompt based on the provided information every time a user instructs you, and in those prompts I would also like you to include a description of the camera angle used to get the shot.

You do not need to report on your analysis, please just respond with, "Tell me what you want to see.".
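The template above asks for a fixed order ([main subject], [lighting/shot/color], [other aesthetics], [artists]) plus the `(term:weight)` emphasis syntax. A minimal Python sketch that assembles a prompt in that order; `weighted` and `build_prompt` are hypothetical helpers, and the quality prefix is the one the template recommends.

```python
def weighted(term: str, weight: float = 1.0) -> str:
    """Wrap a term as (term:weight); above 1 emphasizes, below 1 de-emphasizes."""
    if weight == 1.0:
        return term
    return f"({term}:{weight:g})"


def build_prompt(subject: list[str], lighting: list[str], aesthetics: list[str],
                 artists: list[str], quality_prefix: bool = True) -> str:
    """Join prompt parts front to back, most important first (earlier tokens weigh more)."""
    parts: list[str] = []
    if quality_prefix:
        parts += ["((best quality))", "((masterpiece))", "(detailed)"]
    parts += subject + lighting + aesthetics
    if artists:
        style = "style" if len(artists) == 1 else "styles"
        parts.append(f"in the {style} of " + " and ".join(artists))
    return ", ".join(parts)


print(build_prompt([weighted("elven queen", 1.2)], ["chiaroscuro lighting"],
                   ["vivid colors"], ["Greg Rutkowski"]))
```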

Training ("alchemy"):

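Counterintuitive: when captioning, whatever you want the AI to learn is exactly what you should NOT tag! See the captioning tutorial for details.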
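A common explanation of this rule: tags that stay in a caption are treated as changeable attributes the prompt controls, while traits left untagged get absorbed into the trigger word. A minimal Python sketch, assuming comma-separated tag captions; the trigger word `mychar`, the tag set and `prune_caption` are hypothetical examples.

```python
def prune_caption(caption: str, trigger: str, learn_tags: set[str]) -> str:
    """Drop tags describing what the model should learn, then put the trigger word first."""
    tags = [t.strip() for t in caption.split(",") if t.strip()]
    kept = [t for t in tags if t not in learn_tags and t != trigger]
    return ", ".join([trigger] + kept)


# The character's fixed traits are what we want learned, so they are removed from the caption.
print(prune_caption("1girl, red hair, green eyes, school uniform, classroom",
                    trigger="mychar", learn_tags={"red hair", "green eyes"}))
```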

参数

Step count:

images × repeats × epochs / batch_size = total steps

10 images × 20 repeats × 10 epochs / batch size 2 = 1000 total training steps
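The step formula as a small Python function. How a trainer rounds when images × repeats is not divisible by the batch size varies between tools; this sketch rounds each epoch up, which is an assumption rather than something the note states.

```python
def total_steps(images: int, repeats: int, epochs: int, batch_size: int) -> int:
    """images x repeats x epochs / batch_size, rounding each epoch up to whole batches."""
    steps_per_epoch = -(-(images * repeats) // batch_size)  # ceiling division
    return steps_per_epoch * epochs


print(total_steps(images=10, repeats=20, epochs=10, batch_size=2))
```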

Increase or decrease batch size and learning rate together
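"Together" is often done with the linear scaling heuristic: multiply the learning rate by the same factor as the batch size. A minimal sketch of that heuristic; square-root scaling is another common choice, neither is guaranteed to be optimal for a given LoRA, and `scaled_lr` is a hypothetical helper.

```python
def scaled_lr(base_lr: float, base_batch: int, new_batch: int) -> float:
    """Linear scaling heuristic: keep learning_rate / batch_size constant."""
    if base_batch <= 0 or new_batch <= 0:
        raise ValueError("batch sizes must be positive")
    return base_lr * new_batch / base_batch


# A learning rate tuned at batch size 1, moved to batch size 4.
print(scaled_lr(1e-4, base_batch=1, new_batch=4))
```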

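Speed / quality:

Other tutorials:

ControlNet (CN):

ControlNet v1.1 basic tutorial and model downloads:

Note that many CN models from before v1.1 are no longer needed, because v1.1 has better replacements; the video above covers which new model replaces which old one for most of them. When learning, check which CN version an online tutorial is based on.

CN tutorials:

- Because there are so many CN models and they update quickly, the ControlNet tutorials on Bilibili are messy and uneven in quality. Some recommended creators: @大江户战士, @言萧的AI画室, @AI建筑研究室-帆哥

- The most comprehensive illustrated guide so far:

Flexible use (ideas for combining multiple control units):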

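Basic workflow (roughly):

- Pick a base model (checkpoint); add a VAE if it needs one

- Write the prompt, tune the basic parameters, and see how the raw output looks

- If the output falls short, look for a LoRA (only if needed)

- Once the style is settled, add ControlNet:

  - lineart / lineart_anime (black-and-white line art)

  - depth (depth map)

  - seg (semantic segmentation)

  - reference_only (style transfer)

  - shuffle (composition transfer)

  - color (color transfer)

  - tile (tile-based detail redraw)

  - ip2p (atmosphere change)

- Once ControlNet has produced a good small base image, run Hires.fix (high-resolution redraw); this step is optional, but it adds a lot of detail

- Finally, go to img2img and run Script → SD upscale (can be combined with ControlNet tile)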
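Roughly, SD upscale first enlarges the image and then redraws it with img2img tile by tile, blending the overlapping borders, which is why it pairs well with ControlNet tile. A generic Python sketch of how overlapping tiles can be laid out over an image; it is not the script's own code, and `tile_origins` / `tile_boxes` are hypothetical helpers.

```python
def tile_origins(length: int, tile: int, overlap: int) -> list[int]:
    """Start offsets along one axis so `tile`-px tiles cover `length` px,
    with neighbouring tiles overlapping by at least `overlap` px."""
    if not 0 <= overlap < tile:
        raise ValueError("overlap must be in [0, tile)")
    if tile >= length:
        return [0]
    stride = tile - overlap
    count = -(-(length - overlap) // stride)  # ceiling: fewest tiles that respect the overlap
    # Spread tiles evenly: the first starts at 0 and the last ends exactly at the edge.
    return [round(i * (length - tile) / (count - 1)) for i in range(count)]


def tile_boxes(width: int, height: int, tile: int, overlap: int) -> list[tuple[int, int, int, int]]:
    """(left, top, right, bottom) boxes covering a width x height image, row by row."""
    return [(x, y, min(x + tile, width), min(y + tile, height))
            for y in tile_origins(height, tile, overlap)
            for x in tile_origins(width, tile, overlap)]


print(tile_boxes(1024, 512, tile=512, overlap=64))
```

For example, `tile_boxes(1024, 512, 512, 64)` gives three 512 px tiles starting at x = 0, 256 and 512.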

ControlNet workflow for architecture:

Connecting SD to design software:

Advanced:

AI animation re-rendering

Even this works?!

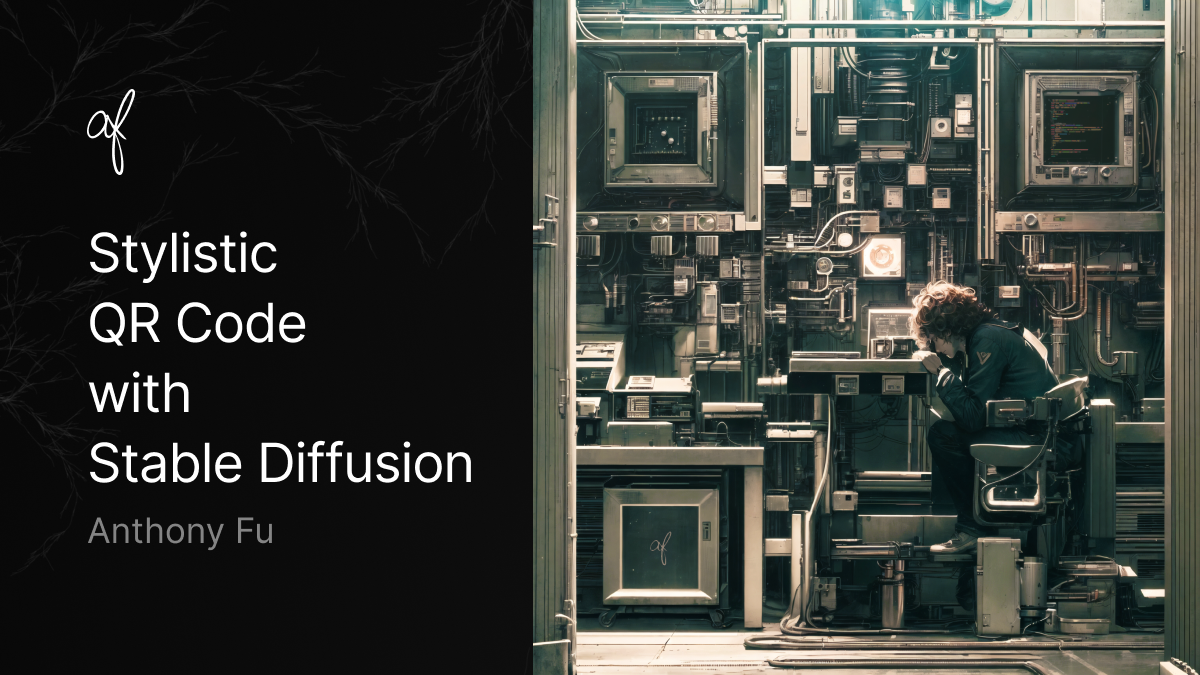

- QR codes

How to do it:

tile 0.5 0.35-0.75

qrcode 1.2 0-1

brightness 0.25 0.35-0.75
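The three lines above are a multi-ControlNet recipe. The note does not label the numbers; they are presumably the control weight followed by the starting and ending control step (as fractions of the sampling run), and the sketch below assumes that reading. `CNUnit` and `active_steps` are hypothetical names, and the extension's own rounding of start/end into step indices may differ.

```python
from dataclasses import dataclass


@dataclass
class CNUnit:
    model: str     # ControlNet model name as written in the note
    weight: float  # assumed: control weight
    start: float   # assumed: starting control step, as a fraction of sampling
    end: float     # assumed: ending control step, as a fraction of sampling

    def active_steps(self, steps: int) -> range:
        """Sampling step indices this unit would act on, under the fraction reading above."""
        return range(round(self.start * steps), round(self.end * steps))


QR_RECIPE = [
    CNUnit("tile", 0.5, 0.35, 0.75),
    CNUnit("qrcode", 1.2, 0.0, 1.0),
    CNUnit("brightness", 0.25, 0.35, 0.75),
]

for unit in QR_RECIPE:
    active = unit.active_steps(20)
    print(f"{unit.model}: weight {unit.weight}, steps {active.start}-{active.stop - 1} of 20")
```

Under this reading, the QR pattern guides the whole run, while tile and brightness only act in the middle of sampling.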

Further reading:

Underlying principles and terminology explained

The origins and evolution of SD